Sally Caulwell for Quanta Magazine

Since the start of the 20th century, the heart of mathematics has been the proof — a rigorous, logical argument for whether a given statement is true or false. Mathematicians’ careers are measured by what kinds of theorems they can prove, and how many. They spend the bulk of their time coming up with fresh insights to make a proof work, then translating those intuitions into step-by-step deductions, fitting different lines of reasoning together like puzzle pieces.

The best proofs are works of art. They’re not just rigorous; they’re elegant, creative and beautiful. This makes them feel like a distinctly human activity — our way of making sense of the world, of sharpening our minds, of testing the limits of thought itself.

But proofs are also inherently rational. And so it was only natural that when researchers started developing artificial intelligence in the mid-1950s, they hoped to automate theorem proving: to design computer programs capable of generating proofs of their own. They had some success. One of the earliest AI programs could output proofs of dozens of statements in mathematical logic. Other programs followed, coming up with ways to prove statements in geometry, calculus and other areas.

Still, these automated theorem provers were limited. The kinds of theorems that mathematicians really cared about required too much complexity and creativity. Mathematical research continued as it always had, unaffected and undeterred.

Now that’s starting to change. Over the past few years, mathematicians have used machine learning models to uncover new patterns, invent new conjectures, and find counterexamples to old ones. They’ve created powerful proof assistants both to verify whether a given proof is correct and to organize their mathematical knowledge.

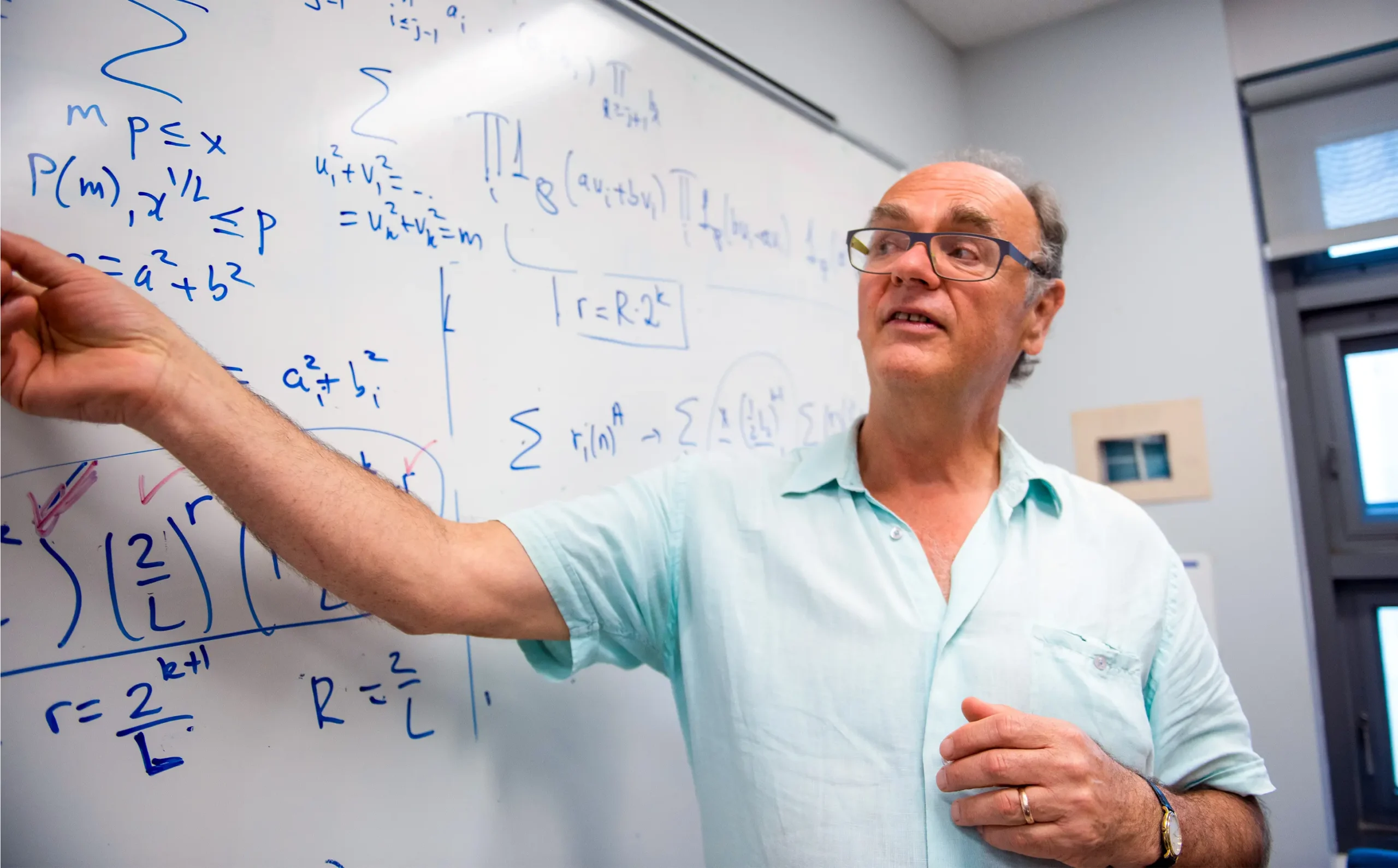

They have not, as yet, built systems that can generate the proofs from start to finish, but that may be changing. In 2024, Google DeepMind announced that they had developed an AI system that scored a silver medal in the International Mathematical Olympiad, a prestigious proof-based exam for high school students. OpenAI’s more generalized “large language model,” ChatGPT, has made significant headway on reproducing proofs and solving challenging problems, as have smaller-scale bespoke systems. “It’s stunning how much they’re improving,” said Andrew Granville, a mathematician at the University of Montreal who until recently doubted claims that this technology might soon have a real impact on theorem proving. “They absolutely blow apart where I thought the limitations were. The cat’s out of the bag.”

Researchers predict they’ll be able to start outsourcing more tedious sections of proofs to AI within the next few years. They’re mixed on whether AI will ever be able to prove their most important conjectures entirely: Some are willing to entertain the notion, while others think there are insurmountable technological barriers. But it’s no longer entirely out of the question that the more creative aspects of the mathematical enterprise might one day be automated.

Andrew Granville worries that outsourcing more rigorous aspects of mathematics to AI could adversely affect researchers’ ability to think. “I feel that my own understanding is not from the bigger picture,” he said. “It’s from getting your hands dirty.”

Alex Tran for Quanta Magazine

Even so, most mathematicians at the moment “have their heads buried firmly in the sand,” Granville said. They’re ignoring the latest developments, preferring to spend their time and energy on their usual jobs.

Continuing to do so, some researchers warn, would be a mistake. Even the ability to outsource boring or rote parts of proofs to AI “would drastically alter what we do and how we think about math over time,” said Akshay Venkatesh, a preeminent mathematician and Fields medalist at the Institute for Advanced Study in Princeton, New Jersey.

He and a relatively small group of other mathematicians are now starting to examine what an AI-powered mathematical future might look like, and how it will change what they value. In such a future, instead of spending most of their time proving theorems, mathematicians will play the role of critic, translator, conductor, experimentalist. Mathematics might draw closer to laboratory sciences, or even to the arts and humanities.

Imagining how AI will transform mathematics isn’t just an exercise in preparation. It has forced mathematicians to reckon with what mathematics really is at its core, and what it’s for.

What Tools Wrought

Socrates was among the first thinkers in the West to worry about how technology could work against clear thinking. In his view, the increasingly widespread technology of writing was an unreliable vehicle for information, one that would erode people’s innate ability to remember.

Today, we could not do mathematics without writing. “Paper functions as an external memory, a different type of thinking system,” Venkatesh said. It has allowed people to store knowledge and pass it down; even decisions about which symbols to use to represent mathematical concepts have led to important advances.

In the time of Socrates, and for the next 2,000 years, Western mathematics was geometry. The ancient Greeks characterized math as the science of magnitude, of things you can draw and measure and count. By representing concepts geometrically, mathematicians could find meaning and discover applications. The work was physical, even tactile.

Then, in 1637, the French mathematician René Descartes published his treatise Discourse on the Method. In its appendix, he introduced what would later be known as Cartesian coordinates: a way to translate geometric curves and shapes into algebraic equations. Algebra became a means of outsourcing certain problems that couldn’t be solved geometrically. But it also meant moving away from a physical understanding of math. “You can slog through the algebra, but you’re working blind in a way,” said Jeremy Gray of the Open University in England. “Plugging and chugging doesn’t have the visual quality that a lot of Euclidean geometry does. It’s powerful, but not so charming.”

In the 17th century, René Descartes introduced a way to represent geometric curves as algebraic equations. It transformed how mathematics was done.

Public Domain

Some mathematicians felt that algebraic methods were taking mathematics in the wrong direction. Chief among them was Isaac Newton. These approaches, he wrote, were “so tedious and entangled as to produce nausea.” To him, geometric intuition was central to what it meant to truly understand something mathematically. By working in terms of algebraic symbols and abstractions, he claimed, mathematicians could not possibly understand what they were doing, even if they thought they did.

At first, algebraic techniques were a means to an end. They simply enabled mathematicians to think about and solve certain problems. But soon mathematicians started studying these techniques for their own sake, leading to all sorts of beautiful math: Calculus, for instance, would have been unimaginable without algebra. This, in turn, led to formalisms such as set theory — the foundation of modern mathematics — which then opened up entirely new areas of study. (Newton, one of the inventors of calculus, originally developed an algebraic framework for it. But because of his philosophical views, he refused to publish his work until he found a way to present it geometrically.)

Mathematics gradually shifted away from its geometric roots and moved toward greater and greater abstraction. “Today the notion that mathematical truth is grounded in geometry seems quaint,” Jeremy Avigad of Carnegie Mellon University wrote in a 2022 essay. Mathematicians now outsource problems to all sorts of methods, both algebraic and not; they often refer to these methods, which get much more complicated than algebraic equations, as mathematical “machinery.” What was once an unacceptable abstraction is now no more mysterious than a power tool, and just as essential for building anything ambitious.

To Know Every Nail

To build a proof, mathematicians start with a sturdy foundation of assumptions, or axioms. They place bricks on top of this foundation one at a time — statements, or lemmas, that eventually come together to help form a single logical structure.

Ultimately, it’s this overarching structure that matters: the walls and stairs and columns that give the proof its shape. But while the most interesting aspect of a proof might be its blueprint — the general design of the argument — the bricks themselves matter, too. Lemmas are minor statements that also need to be proved true, then combined in clever ways, to construct the full proof.

La Géométrie, a short appendix that appeared in Descartes’ famous Discourse on the Method, laid the foundations of analytic geometry. By introducing the notion of a coordinate system, Descartes built a bridge between geometry and algebra that would later make it possible to develop calculus and other important areas of math.

Public Domain

A lemma might involve generalizing a known statement, for instance, or showing that an object satisfies a particular set of properties. Usually, mathematicians are reasonably confident that such lemmas are true. But proving them rigorously can take a while, anywhere from hours to weeks. They might not require a lot of creativity, but they’re time-consuming, and they can end up comprising pages and pages in a final proof.

In a few years, AI models (paired with formal verification systems to check for accuracy) might be able to prove these lemmas automatically, in the same way that mathematicians currently outsource simple arithmetic to computer programs. If this happens, papers will be easier to write. Mathematics will proceed more quickly, opening up new areas of study at a much faster pace. And math education might change significantly.

In this vision, mathematicians will continue to be the architects of new mathematical cathedrals. But they’ll no longer have to be the construction crew as well, crafting and pounding in every brick, joist and nail.

Yet even this use of AI — to achieve something humans can already do, albeit more slowly — could vastly change mathematics, much as the introduction of algebraic machinery did in the 17th century. To get a sense of what this might look like, Heather Macbeth of Imperial College London has been comparing traditional proofs with proofs of the same theorems written using the proof assistant Lean. In a Lean proof, all the steps are written in computer code, some by hand, others using AI; a software program then verifies that the steps follow a valid chain of logic, and that the proof is correct. Macbeth has found that in such a proof, you can package more information into automated processes called “tactics,” freeing up mathematicians to focus on higher-level descriptions and understanding.

“Even in what we think of as proof-based mathematics, the bread and butter of grown-up research mathematics, there’s still a lot in there that is actually computation,” Macbeth said. As these parts of the proof get outsourced to computers, whether to Lean or some other AI system, mathematicians can spend more energy on providing explanations and conveying the most important ideas; there will be less of an emphasis on detail, because the nitty-gritty will become the purview of the AI. That would represent a significant culture shift in mathematics: Mathematicians would no longer have to concern themselves as much with rigor.

“There would be some kind of divorce between rigor on the paper and rigor in your head,” said Daniel Litt of the University of Toronto. “I would understand something new in kind of a holistic sense, even if I wouldn’t understand all the details.”

Yet mathematicians are, on the whole, preternaturally disposed by temperament or training to concern themselves with rigor. “I do not like the feeling of not understanding the details, so I would have to come to peace with that feeling,” Litt said.

For millennia, Euclid’s Elements of Geometry profoundly influenced how mathematicians thought about rigor. But over the past 400 years, math has become increasingly abstract.

Public Domain

That feeling isn’t just discomfort; mathematicians worry (much as Socrates did) that if they stop emphasizing rigor, it could have negative effects on their ability to think. “You may say, well, then, OK, you’re free to look at the bigger picture. But I feel that my own understanding is not from the bigger picture, it’s from getting your hands dirty,” Granville said. “The power of abstraction is best in the hands of people who also understand the practical.”

Addition by Division

Many mathematicians may feel uneasy not understanding every last detail in their proofs, but we already have examples of highly successful research programs in which no one person can hold every detail in their heads. In particle physics, for example, there were more than 3,000 authors on the papers that announced the discovery of the Higgs boson, the crowning achievement of a scientific experiment that took decades of effort and billions of dollars. Even in math, over the course of the 20th century more than 100 researchers contributed to a massive proof — filling over 10,000 pages — that classified important objects called finite simple groups.

AI stands to make this kind of mathematical project the rule rather than the exception, to transform the subject into large collaborations that emphasize experimentation and make it possible to ask questions that couldn’t be asked before.

In fall 2024, to demonstrate what that future might look like, Terence Tao of the University of California, Los Angeles launched the equational theories project. First, he considered a simple mathematical object called a magma: a set of elements, along with a rule for combining any two elements in the set to make a third. (That rule might be addition, or multiplication, or a less familiar operation. One of the most central objects in math, called a group, is a particular kind of magma.)

Tao then came up with thousands of statements that described how the elements in any given magma might behave. For instance, the elements might be commutative, meaning that the order in which you combine them doesn’t matter. Or combining an element with itself might always give you the same element. Each statement “by itself is a boring object,” Tao said. But he wanted to understand how all these statements related to each other. If a magma satisfied one statement, it might also have to satisfy a slew of additional statements, but not others.

There were 22 million possible implications among all the statements, and Tao wanted to assess whether each and every one of them was true or false. “You’re looking at the landscape of these equations as a big thing, and you’re studying that landscape in itself,” said Adam Topaz of the University of Alberta, who was not involved in the project. “Without computers, we couldn’t do that.”

Within a couple of months, more than 50 participants, many of them amateurs, had proved the truth or falsehood of nearly all 22 million implications. Some used AI; others solved implications by hand. Ultimately, the project, which was completed in April 2025, opened up a new area of mathematical questioning that otherwise wouldn’t have seemed interesting at all.

This provides a glimmer of what AI-assisted mathematical research will look like. “In the future, when we explore an area of mathematics, you could first get an AI to explore millions of problems and get some preliminary view of the landscape,” Tao said. “Just very low-quality. But this will guide the more sophisticated humans to say, ‘OK, now, based on this sort of experiment, we should direct our human resources toward this.’”

“It’s a more scattershot approach,” said Maia Fraser of the University of Ottawa. Or, as Rodrigo Ochigame, an anthropologist and historian at Leiden University in the Netherlands, put it, “it has this kind of undisciplined, experimental, bootstrapping character.”

“We’d be more like physicists,” Granville said. “We’d have a tendency to speak our guess first, then hope it’s right. It’s a very different approach, where you’re wrong a lot of the time and you just occasionally hope you’re going to hit the mark.”

The mathematician Terence Tao proposed his “equational theories project” to test what a more collaborative, experimental, AI-powered future might look like.

Reed Hutchinson

Like physics and other laboratory sciences, then, mathematics might also involve more division of labor. Currently, a mathematician is responsible for performing all mathematical tasks from start to finish: coming up with new ideas, proving lemmas and theorems, writing up proofs, and communicating them. That’s very likely to change with AI. Some mathematicians might continue to do math by hand, where there are gaps in the AI systems’ abilities. Other mathematicians might be responsible for developing theories to test, or translating conjectures into the language of computers so that the AI and verification systems can be put to work, or making sure that what the AI is proving is actually what the mathematicians want to prove (an incredibly difficult task), or coordinating among the project’s many collaborators, or explaining automated proofs to others. “In physics or chemistry, you have people who come up with theories, you have people doing experiments, and both value the other side,” said Johan Commelin, a mathematician at Utrecht University and the Lean Focused Research Organization. “It might start looking more like that.”

“We will see more group projects where no single person knows everything that’s going on, but where people can collectively accomplish a lot more than any individual person can,” Tao said. “Which is how the rest of the modern world works.”

The End of Mathematics?

It might be surprising to learn that what counts as a significant mathematical question is often a matter of taste. In a profound way, the question “What is math?” is the same as the question “What do mathematicians think is important?”

According to Venkatesh, the easier it gets to solve certain mathematical problems, the less mathematicians will value those problems. “It’s like high fashion,” said Alex Davies, a researcher at DeepMind. “Once you have the technology to make high fashion very easily available to people, and it becomes mass-market, then it becomes less fashionable. And the high-fashion people go and do something else.”

AI systems might be better suited to certain problems, making those problems inherently less interesting. It’s unclear which subjects could fall first. Problems about finding optimal solutions to functions, for instance, were once a more central part of pure math, deeply intertwined with calculus, algebra and other fields. But in the mid-20th century, with the development of computer-based techniques, optimization proofs tended to get reduced to computations. The focus shifted to applications of these techniques, and so today, while optimization problems are still important, they belong more to the realm of applied math — a field that, rather than involving the study of ideas or concepts for their own sake, aims to use them as a means toward a specific, practical end.

AI might be particularly suited to questions in optimization and other fields that tend to be more concrete, or that tend to use the same kinds of proof techniques repeatedly. Some mathematicians, for instance, think that AI might transform combinatorics, the mathematical study of counting. Other mathematicians think the easiest subjects to automate will be those that involve more symbolic representations, such as algebra.

Still, if AI gets good enough to solve problems in some of these fields, there might be nothing to stop it from eventually getting good at problems in all fields. In that future, mathematicians could be left to focus their attention on other things. “I believe that AI will be able to prove a lot of things, but that mathematicians are just going to shift the level of abstraction that they work in,” Topaz said.

In working with AI, for instance, Litt has come to realize that a lot more math than he’d been aware of is about knowing a huge collection of facts and putting them together in interesting ways — rather than having a eureka moment. “I somehow understood that a lot of what I think about as difficult is more about your working memory and knowledge, rather than creativity,” he said. “It shook my confidence that I understand what it is we do or what makes a good mathematician. Now I think that most of what I do is putting together some facts that are known in standard ways. And then every once in a while, there’s actually a creative part of what I do. I make an analogy or develop a new definition.”

This means that “a lot of what I do is something that’s within reach of a machine,” he added.

Perhaps mathematicians will instead spend most of their time trying to understand the proofs the AI system generates — a task that will require a great deal of time, effort and ingenuity. Mark Kisin, a mathematician at Harvard University, foresees the field shifting to more closely resemble the humanities, perhaps anywhere in the next 10 to 100 years. “If you look at a typical English department at a university, it’s not usually staffed by people who write literature,” he said. “It’s staffed by people who critique literature.” Similarly, he said, mathematicians might assume the role of critics who closely analyze AI proofs and then teach them in seminars. (Ronen Eldan, a mathematician who recently left the Weizmann Institute of Science for OpenAI, recalls a conversation in which another mathematician predicted that “mathematicians will be like pianists today,” he said. “They don’t play their own compositions, but people still come to hear them.”)

Even then, there will be plenty for mathematicians to do, from coming up with new definitions and abstractions to deciding which new research directions will be most interesting to pursue. “It’s hard for me to imagine the fundamental creative work of guiding the program of mathematics as happening by anybody other than humans,” said Emily Riehl of Johns Hopkins University.

Still, the potential changes that mathematicians envision are profound. “It will in some sense be the end of research mathematics as it’s currently practiced,” Litt said. “But that doesn’t mean it will be the end of mathematicians.”

“I think that would be a blow to my ego, but I don’t think I would be super upset about it,” he added. “If there’s a large language model that can prove the Riemann hypothesis and explain the proof to me, I would still very happily learn it. Mostly, what I want to do in math is understand what’s true and why it’s true.”

“For the last 50 years, we were sort of in a stationary environment. We could keep doing what we were doing,” Venkatesh said. “But we can’t do that anymore.”